Category: Data Sets

RAMP – Get RAMP Data

June 28, 2023

GISMO – Data Products

October 24, 2022

Global Ice Sheet Mapping Orbiter (GISMO)

January 23, 2023

Glacier Speed – How to Cite

March 8, 2024

Glacier Speed – Applications

January 22, 2023

Glacier Speed – Download Data

October 24, 2022

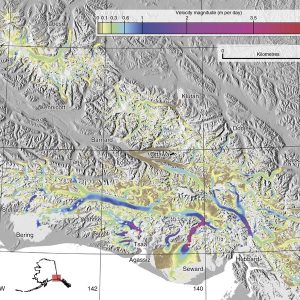

Glacier Speed – About the Glacier-Flow-Speed Dataset

January 22, 2023

Glacier Speed

March 8, 2024

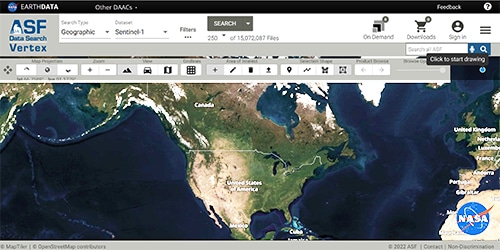

ARIA Sentinel-1 Geocoded Unwrapped Interferograms

May 24, 2023